Data and evidence

Without measurement we are guessing.

Data driven

Data is at the core of all our programmes. We believe that without high-quality evidence, we cannot know what does and does not work to maximise the potential impact for children. Only by investing in data and evidence can we measure the impact of what we do.

Periodically, we post open evaluation opportunities in order to solicit proposals. To see current opportunities, download requests for proposals (RFPs) and find information on how to apply, visit our Open Evaluation Opportunities page.

We have a team of dedicated Evidence, Measurement and Evaluation (EME) specialists who provide internal expertise and oversee a portfolio of third-party evaluations to:

- Assess evidence to inform decision making and generate fresh, credible evidence where needed.

- Ensure robust monitoring systems to support programme investments and generate data that can be used for decision making, including course-correction with partners where necessary.

- Evaluate the impact of our investments, for both learning and accountability purposes.

- Improve and disseminate knowledge to support greater impact.

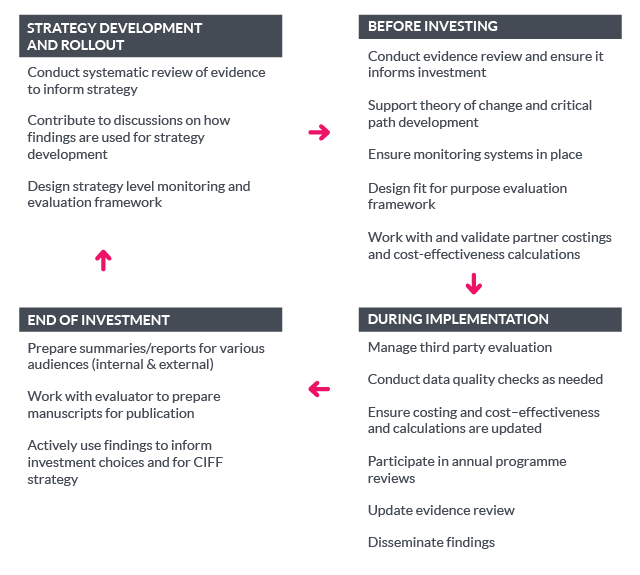

The EME team plays an important role throughout the programme investment lifecycle:

Cascade to Impact

The Cascade to Impact is a key tool that we use to summarise the Theory of Change of a given investment, together with its key drivers of success and outcomes. Each Cascade is a dynamic, live tool, continuously reviewed with our partners so that we can course-correct our approach to achieving lasting impact. This short animated video provides practical guidance on how we use the tool.

Evaluations

We apply a number of principles when measuring and evaluating our portfolio of programme investments.

Design

- A clear programme logic and hypothesis of change are essential to designing effective monitoring and evaluation frameworks.

- Knowing ‘how’ change happens is just as important as knowing whether there is change.

- The audience for the evaluation should be identified at the outset.

- Planning for measurement and evaluation needs to be built in early, and agreed with partners during the design and investment phase.

- It is important to plan for the dissemination of evaluation findings (even if the results are ultimately not statistically significant) so that we can share what we are learning.

Implementation

- The evaluation partner should be involved in programme work-planning sessions.

- As far as possible, existing data systems should be enhanced and their quality improved, rather than creating a parallel system to meet short-term data requirements.

- The success of evaluations is determined by how useful they are in advancing effective development efforts.